Eyebot

Multimodal interaction, Arduino, Prototype

Use your eyes to control the robot.

#1. Design Approach

First approach - helping children build concentration

We researched attention control and children's ability to concentrate, and identified a few factors and principles behind the cognitive process of attention control, which is thought to be closely related to other executive functions such as working memory.

6-year-old: capacity to focus on a task for at least 15 minutes

9-year-old: capacity to focus on a task for one hour

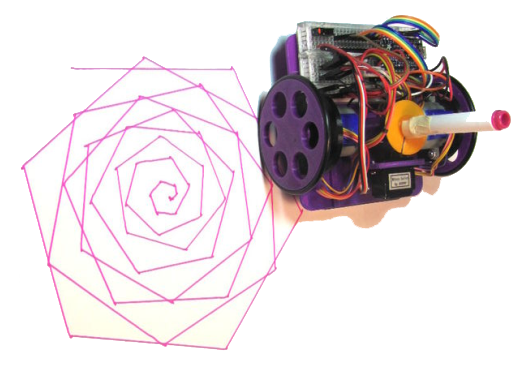

Therefore, we developed the idea of a "drawing robot": the child looks at a piece of paper showing the diagram to be drawn and traces it with their eyes. Meanwhile, the robot draws the same diagram that the child is "drawing" with his or her pupils.

We then prototyped and tested the idea with classmates acting as users. We found that this design concept could limit users, since the Arduino robot is able to do much more. This led to the second design approach.

Second approach - helping children build concentration and assisting disabled patients

Following the principle that "less is more", we decided not to impose too many restrictions on users. They are free to use the eyebot in their own ways, which also opens the project up to many more possibilities.

We have also always looked for ways to control objects with more than just our hands. This approach could help disabled patients, since some of them may only be able to pass information with their pupils. Meanwhile, children can still use it as a drawing robot.

Therefore, the main concept would be:

Use your eyes to control the robot.

#2. Prototype

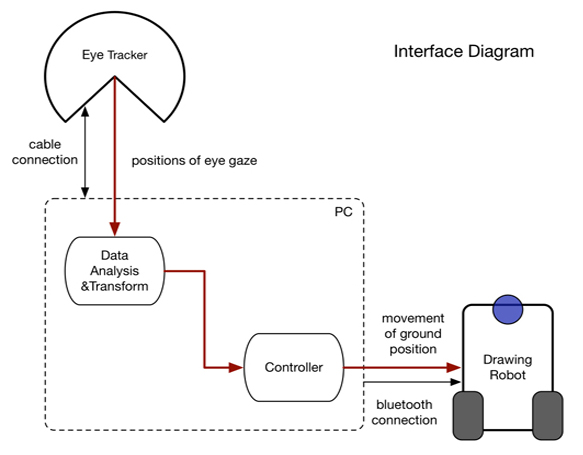

The project involves three components: the eye tracker from Pupil Labs, the Bluetooth-equipped Arduino robot from SPECS lab, and a PC acting as the controller.

First, we get the gaze position from the eye tracker as an (x, y) coordinate. The data is then sent through a local port to the processing program. There, we translate the gaze position into control instructions for the robot and finally send them to the Arduino robot through the Bluetooth port.
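The translation step can be illustrated with a minimal Python sketch. It is not the project's actual code: it assumes the gaze position arrives normalized to the range 0..1 with the origin at the bottom-left, and the single-character commands, the `gaze_to_command` function, and the `dead_zone` parameter are all hypothetical names chosen for illustration.

```python
# Hypothetical single-character commands; the real robot protocol
# is not described in this write-up.
FORWARD, BACKWARD, LEFT, RIGHT, STOP = "F", "B", "L", "R", "S"


def gaze_to_command(x: float, y: float, dead_zone: float = 0.15) -> str:
    """Map a normalized gaze point to a drive command.

    Assumes x and y lie in 0..1 with the origin at the bottom-left,
    so (0.5, 0.5) is the center of the field of view.
    """
    # Offset of the gaze point from the center of the view.
    dx = x - 0.5
    dy = y - 0.5

    # Looking near the center means "stop", so small eye movements
    # do not move the robot.
    if abs(dx) <= dead_zone and abs(dy) <= dead_zone:
        return STOP

    # Otherwise the dominant axis decides the direction.
    if abs(dx) > abs(dy):
        return RIGHT if dx > 0 else LEFT
    return FORWARD if dy > 0 else BACKWARD


if __name__ == "__main__":
    for point in [(0.5, 0.5), (0.9, 0.5), (0.5, 0.1)]:
        print(point, gaze_to_command(*point))
```

In a setup like the one described, the returned command would then be written to the Bluetooth port. The dead zone is one possible way to keep the robot still while the user looks straight ahead.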

#3. Demo

During the final demo session, it was concluded that the eyebot offers a good user experience for manipulating the robot with the eyes. Other possible uses of the eyebot were also discussed, along with various extensions. The project is an approach to embodied interaction, and we hope it can inform what we go on to achieve in the future.

Some problems were also found during testing, such as eye fatigue and the fact that you cannot look at the robot while you are controlling it. Further improvements are needed.