Light Avatar

Multimodal design, Arduino development; first author of the patent

#1. What problem are we solving?

During VUI-related user interviews and observations, we found a critical issue that consistently bothered users:

Modern vehicles are equipped with multi-zone systems to allow various passengers to take advantage of voice-activated features or engage in hands-free telephone conversations in different areas of the vehicle.

In systems where each person speaking has a dedicated zone close to the respective person's position, it can be difficult for a speaker and driver to know if the voice assistant has properly identified which zone the speaker is in, and what the current status of the voice assistant is.

For example, current practices require a speaker, who is often the driver of a vehicle, to quickly look at small display screens to obtain information, thereby taking their eyes off the road.

These problems can lead to the speaker driving dangerously, needlessly repeating themselves, waiting to speak, or speaking at the wrong time to issue a command or request to the voice assistant.

#2. How did we develop it?

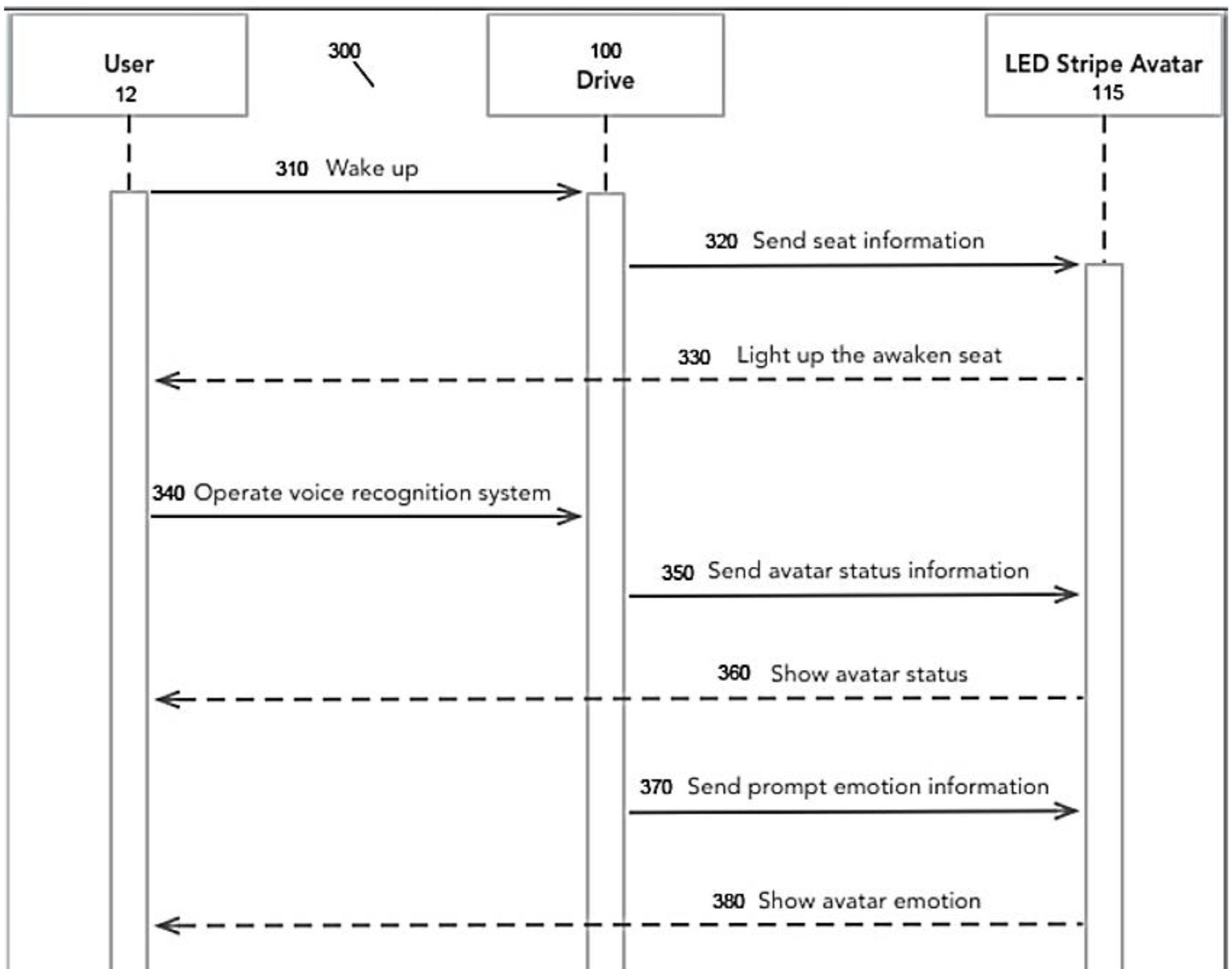

The system is composed of three parts: the recognition system, an Arduino board, and an LED strip.

I was responsible for the design and for the Arduino development.

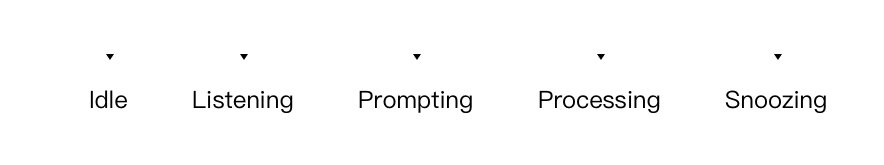

When a user speaks a command, the recognition system receives the information and sends it to the Arduino. The Arduino then commands the LED strip to display various lighting effects.

Meanwhile, we applied emotional design methods based on color psychology, which enable the LED avatar to express more information.
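To make the data flow concrete, here is a minimal sketch of how the Arduino side might decode a message from the recognition system and decide which part of the strip to light. The wire format (`"<zone>:<state>"`, for example `"2:L"`), the state names, the zone count, and the number of LEDs per zone are all assumptions made for illustration; they are not taken from the actual project. The logic is written as plain C++ so it can be compiled and checked off-device.

```cpp
#include <cassert>
#include <string>

// Hypothetical assistant states; the real project's states are not documented here.
enum class AssistantState { Idle, Listening, Processing, Speaking };

// One decoded message: which seat zone spoke and what the assistant is doing.
struct ZoneEvent {
    int zone;              // 0-based seat zone index
    AssistantState state;
};

constexpr int kZoneCount = 6;     // assumption: one zone per seat
constexpr int kLedsPerZone = 10;  // assumption: strip is split evenly between zones

// Maps a single-letter state code to a state. Returns false for unknown codes.
bool parseState(char code, AssistantState& out) {
    switch (code) {
        case 'I': out = AssistantState::Idle;       return true;
        case 'L': out = AssistantState::Listening;  return true;
        case 'P': out = AssistantState::Processing; return true;
        case 'S': out = AssistantState::Speaking;   return true;
        default:  return false;
    }
}

// Parses a message of the assumed form "<zone digit>:<state letter>", e.g. "2:L".
// Returns false (and leaves `out` untouched) if the message is malformed.
bool parseMessage(const std::string& msg, ZoneEvent& out) {
    if (msg.size() != 3 || msg[1] != ':') return false;
    if (msg[0] < '0' || msg[0] >= '0' + kZoneCount) return false;
    AssistantState state;
    if (!parseState(msg[2], state)) return false;
    out = ZoneEvent{msg[0] - '0', state};
    return true;
}

// The contiguous segment of the LED strip that belongs to one zone.
struct LedRange { int first; int count; };

LedRange ledsForZone(int zone) {
    assert(zone >= 0 && zone < kZoneCount);
    return LedRange{zone * kLedsPerZone, kLedsPerZone};
}
```

On a real board, the message would typically be read from the serial port, and the resulting `LedRange` would be passed to whichever LED library drives the strip. Rejecting malformed messages up front keeps a noisy serial line from lighting the wrong seat.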
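As a rough illustration of how a state could be turned into an expressive lighting effect, the sketch below pairs each assistant state with a base color and modulates it with a slow "breathing" brightness curve. The specific colors, the state names, and the triangle-wave timing are hypothetical choices for this example, not the palette or effects used in the project.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical assistant states, chosen for illustration only.
enum class AssistantState { Idle, Listening, Processing, Speaking };

struct Rgb { std::uint8_t r, g, b; };

// Assumed palette: cool colors for passive states, warmer ones for activity.
Rgb baseColor(AssistantState s) {
    switch (s) {
        case AssistantState::Idle:       return Rgb{20, 20, 20};    // dim white
        case AssistantState::Listening:  return Rgb{0, 120, 255};   // calm blue
        case AssistantState::Processing: return Rgb{255, 160, 0};   // amber
        case AssistantState::Speaking:   return Rgb{0, 200, 80};    // green
    }
    return Rgb{0, 0, 0};  // unreachable for valid states
}

// Scales a color by a brightness value in the range 0..255.
Rgb scale(Rgb c, std::uint8_t brightness) {
    return Rgb{
        static_cast<std::uint8_t>(c.r * brightness / 255),
        static_cast<std::uint8_t>(c.g * brightness / 255),
        static_cast<std::uint8_t>(c.b * brightness / 255)};
}

// Triangle-wave brightness: rises from 0 to 255 over half a period, then falls back.
std::uint8_t breathe(unsigned long tMs, unsigned long periodMs) {
    assert(periodMs >= 2);
    unsigned long half = periodMs / 2;
    unsigned long phase = tMs % periodMs;
    unsigned long level = phase < half ? phase : periodMs - phase;
    if (level > half) level = half;  // guards odd periods against overflow
    return static_cast<std::uint8_t>(level * 255 / half);
}
```

For example, `scale(baseColor(AssistantState::Listening), breathe(t, 2000))` would yield a blue that fades in and out every two seconds, where `t` is the elapsed time in milliseconds.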

#3. What does it look like?

Here is a rough demo of the idea:

Finally, the project was successfully embedded in a 6-seat MPV and demonstrated to the public.